The National State Tax Service University of Ukraine

Texts for the Working Curriculum of the Course "Foreign Language" (English) for Bachelor's Training in the Field of Knowledge 0501 "Informatics and Computer Engineering", Training Direction 6.050101 "Computer Sciences"

(Electronic resource)

Science


Science (from the Latin scientia, meaning "knowledge") is, in its broadest sense, any systematic knowledge that is capable of resulting in a correct prediction or reliable outcome. In this sense, science may refer to a highly skilled technique, technology, or practice.

In today's more restricted sense, science refers to a system of acquiring knowledge based on scientific method, and to the organized body of knowledge gained through such research. It is a "systematic enterprise of gathering knowledge about the world and organizing and condensing that knowledge into testable laws and theories". This article focuses upon science in this more restricted sense, sometimes called experimental science, and also gives some broader historical context leading up to the modern understanding of the word "science."

From the Middle Ages to the Enlightenment, "science" had more-or-less the same sort of very broad meaning in English that "philosophy" had at that time. By the early 1800s, "natural philosophy" (which eventually evolved into what is today called "natural science") had begun to separate from "philosophy" in general. In many cases, "science" continued to stand for reliable knowledge about any topic, in the same way it is still used in the broad sense in modern terms such as library science, political science, and computer science. In the narrower sense of "science" today, as natural philosophy became linked to an expanding set of well-defined laws (beginning with Galileo's laws, Kepler's laws, and Newton's laws of motion), it became more common to refer to natural philosophy as "natural science". Over the course of the 1800s, the word "science" became increasingly associated mainly with the disciplined study of the natural world (that is, the non-human world). This sometimes left the study of human thought and society in a linguistic limbo, which has today been resolved by classifying these areas of study as the social sciences.

Basic classifications

Scientific fields are commonly divided into two major groups: natural sciences, which study natural phenomena (including biological life), and social sciences, which study human behavior and societies. These groupings are empirical sciences, which means the knowledge must be based on observable phenomena and capable of being tested for its validity by other researchers working under the same conditions. There are also related disciplines that are grouped into interdisciplinary and applied sciences, such as engineering and health science. Within these categories are specialized scientific fields that can include elements of other scientific disciplines but often possess their own terminology and body of expertise.

Mathematics, which is classified as a formal science, has both similarities and differences with the natural and social sciences. It is similar to empirical sciences in that it involves an objective, careful and systematic study of an area of knowledge; it is different because of its method of verifying its knowledge, using a priori rather than empirical methods. Formal science, which also includes statistics and logic, is vital to the empirical sciences. Major advances in formal science have often led to major advances in the empirical sciences. The formal sciences are essential in the formation of hypotheses, theories, and laws, both in discovering and describing how things work (natural sciences) and how people think and act (social sciences).

Applied science (i.e. engineering) is the practical application of scientific knowledge.

History and etymology

It is widely accepted that 'modern science' arose in the Europe of the 17th century (towards the end of the Renaissance), introducing a new understanding of the natural world. While empirical investigations of the natural world have been described since antiquity (for example, by Aristotle and Pliny the Elder), and scientific methods have been employed since the Middle Ages (for example, by Alhazen and Roger Bacon), the dawn of modern science is generally traced back to the early modern period during what is known as the Scientific Revolution of the 16th and 17th centuries.

The word "science" comes through the Old French, and is derived in turn from the Latin scientia, "knowledge", the nominal form of the verb scire, "to know". The Proto-Indo-European (PIE) root that yields scire is *skei-, meaning to "cut, separate, or discern". Similarly, the Greek word for science is 'επιστήμη', deriving from the verb 'επίσταμαι', 'to know'. From the Middle Ages to the Enlightenment, science or scientia meant any systematic recorded knowledge. Science therefore had the same sort of very broad meaning that philosophy had at that time. In other languages, including French, Spanish, Portuguese, and Italian, the word corresponding to science also carries this meaning.

Prior to the 1700s, the preferred term for the study of nature among English speakers was "natural philosophy", while other philosophical disciplines (e.g., logic, metaphysics, epistemology, ethics and aesthetics) were typically referred to as "moral philosophy". Today, "moral philosophy" is more-or-less synonymous with "ethics". Well into the 1700s, science and natural philosophy were not quite synonymous, but only became so later with the direct use of what would become known formally as the scientific method. By contrast, the word "science" in English was still used in the 17th century (1600s) to refer to the Aristotelian concept of knowledge which was secure enough to be used as a prescription for exactly how to accomplish a specific task. With respect to the transitional usage of the term "natural philosophy" in this period, the philosopher John Locke wrote disparagingly in 1690 that "natural philosophy is not capable of being made a science".

Locke's assertion notwithstanding, by the early 1800s natural philosophy had begun to separate from philosophy, though it often retained a very broad meaning. In many cases, science continued to stand for reliable knowledge about any topic, in the same way it is still used today in the broad sense (see the introduction to this article) in modern terms such as library science, political science, and computer science. In the more narrow sense of science, as natural philosophy became linked to an expanding set of well-defined laws (beginning with Galileo's laws, Kepler's laws, and Newton's laws for motion), it became more popular to refer to natural philosophy as natural science. Over the course of the nineteenth century, moreover, there was an increased tendency to associate science with study of the natural world (that is, the non-human world). This move sometimes left the study of human thought and society (what would come to be called social science) in a linguistic limbo by the end of the century and into the next.

Through the 1800s, many English speakers were increasingly differentiating science (i.e., the natural sciences) from all other forms of knowledge in a variety of ways. The now-familiar expression “scientific method,” which refers to the prescriptive part of how to make discoveries in natural philosophy, was almost unused until then, but became widespread after the 1870s, though there was rarely total agreement about just what it entailed. The word "scientist," meant to refer to a systematically working natural philosopher (as opposed to an intuitive or empirically minded one), was coined in 1833 by William Whewell. Discussion of scientists as a special group of people who did science, even if their attributes were up for debate, grew in the last half of the 19th century. Whatever people actually meant by these terms at first, they ultimately depicted science, in the narrow sense of the habitual use of the scientific method and the knowledge derived from it, as something deeply distinguished from all other realms of human endeavor.

By the twentieth century (1900s), the modern notion of science as a special kind of knowledge about the world, practiced by a distinct group and pursued through a unique method, was essentially in place. It was used to give legitimacy to a variety of fields through such titles as "scientific" medicine, engineering, advertising, or motherhood. Over the 1900s, links between science and technology also grew increasingly strong. As Martin Rees explains, progress in scientific understanding and technology have been synergistic and vital to one another.

Richard Feynman described science in the following way for his students: "The principle of science, the definition, almost, is the following: The test of all knowledge is experiment. Experiment is the sole judge of scientific 'truth'. But what is the source of knowledge? Where do the laws that are to be tested come from? Experiment, itself, helps to produce these laws, in the sense that it gives us hints. But also needed is imagination to create from these hints the great generalizations — to guess at the wonderful, simple, but very strange patterns beneath them all, and then to experiment to check again whether we have made the right guess." Feynman also observed, "...there is an expanding frontier of ignorance...things must be learned only to be unlearned again or, more likely, to be corrected."

Scientific method

A scientific method seeks to explain the events of nature in a reproducible way, and to use these findings to make useful predictions. This is done partly through observation of natural phenomena, but also through experimentation that tries to simulate natural events under controlled conditions. Taken in its entirety, the scientific method allows for highly creative problem solving whilst minimizing any effects of subjective bias on the part of its users (notably confirmation bias).

Basic and applied research

Although some scientific research is applied research into specific problems, a great deal of our understanding comes from the curiosity-driven undertaking of basic research. This leads to options for technological advance that were not planned or sometimes even imaginable. This point was made by Michael Faraday when, allegedly in response to the question "what is the use of basic research?" he responded "Sir, what is the use of a new-born child?". For example, research into the effects of red light on the human eye's rod cells did not seem to have any practical purpose; eventually, the discovery that our night vision is not troubled by red light would lead militaries to adopt red light in the cockpits of all jet fighters.

Experimentation and hypothesizing

[Figure: DNA determines the genetic structure of all known life.]

[Figure: The Bohr model of the atom. Like many ideas in the history of science, it was at first prompted by (and later partially disproved by) experimentation.]

Based on observations of a phenomenon, scientists may generate a model. This is an attempt to describe or depict the phenomenon in terms of a logical, physical or mathematical representation. As empirical evidence is gathered, scientists can suggest a hypothesis to explain the phenomenon. Hypotheses may be formulated using principles such as parsimony (traditionally known as "Occam's Razor") and are generally expected to seek consilience, that is, to fit well with other accepted facts related to the phenomena. This new explanation is used to make falsifiable predictions that are testable by experiment or observation. When a hypothesis proves unsatisfactory, it is either modified or discarded. Experimentation is especially important in science to help establish causal relationships (and so avoid the fallacy of inferring causation from mere correlation). Operationalization also plays an important role in coordinating research in and across different fields.

Once a hypothesis has survived testing, it may become adopted into the framework of a scientific theory. This is a logically reasoned, self-consistent model or framework for describing the behavior of certain natural phenomena. A theory typically describes the behavior of much broader sets of phenomena than a hypothesis; commonly, a large number of hypotheses can be logically bound together by a single theory. Thus a theory is a hypothesis explaining various other hypotheses. In that vein, theories are formulated according to most of the same scientific principles as hypotheses.

While performing experiments, scientists may have a preference for one outcome over another, and so it is important to ensure that science as a whole can eliminate this bias. This can be achieved by careful experimental design, transparency, and a thorough peer review process of the experimental results as well as any conclusions. After the results of an experiment are announced or published, it is normal practice for independent researchers to double-check how the research was performed, and to follow up by performing similar experiments to determine how dependable the results might be.

Certainty and science

Unlike a mathematical proof, a scientific theory is empirical and is always open to falsification if new evidence is presented. That is, no theory is ever considered strictly certain, since science works under a fallibilistic view. Instead, science prides itself on making predictions with high probability, bearing in mind that the most likely event is not always what actually happens. During the Yom Kippur War, cognitive psychologist Daniel Kahneman was asked to explain why one squad of aircraft had returned safely, yet a second squad on the exact same operation had lost all of its planes. Rather than conduct a study in the hope of finding a new hypothesis, Kahneman simply reiterated the importance of expecting some coincidences in life, explaining that absurdly rare things, by definition, occasionally happen.

[Figure: Though the scientist believing in evolution admits uncertainty, she is probably correct.]

Theories very rarely result in vast changes in our understanding. According to psychologist Keith Stanovich, it may be the media's overuse of words like "breakthrough" that leads the public to imagine that science is constantly proving everything it thought was true to be false. While there are such famous cases as the theory of relativity that required a complete reconceptualization, these are extreme exceptions. Knowledge in science is gained by a gradual synthesis of information from different experiments, by various researchers, across different domains of science; it is more like a climb than a leap. Theories vary in the extent to which they have been tested and verified, as well as their acceptance in the scientific community. For example, heliocentric theory, the theory of evolution, and germ theory still bear the name "theory" even though, in practice, they are considered factual.

Philosopher Barry Stroud adds that, although the best definition for "knowledge" is contested, being skeptical and entertaining the possibility that one is incorrect is compatible with being correct. Ironically then, the scientist adhering to proper scientific method will doubt themselves even once they possess the truth.

Stanovich also asserts that science avoids searching for a "magic bullet"; it avoids the single cause fallacy. This means a scientist would not ask merely "What is the cause of...", but rather "What are the most significant causes of...". This is especially the case in the more macroscopic fields of science (e.g. psychology, cosmology). Of course, research often analyzes only a few factors at once, but this is always done to add to the long list of factors that are most important to consider. For example, knowing the details of only a person's genetics, or their history and upbringing, or their current situation may not explain a behaviour, but a deep understanding of all these variables combined can be very predictive.

Mathematics

[Figure: Data from the famous Michelson–Morley experiment.]

Mathematics is essential to the sciences. One important function of mathematics in science is the role it plays in the expression of scientific models. Observing and collecting measurements, as well as hypothesizing and predicting, often require extensive use of mathematics. Arithmetic, algebra, geometry, trigonometry and calculus, for example, are all essential to physics. Virtually every branch of mathematics has applications in science, including "pure" areas such as number theory and topology.

Statistical methods, which are mathematical techniques for summarizing and analyzing data, allow scientists to assess the level of reliability and the range of variation in experimental results. Statistical analysis plays a fundamental role in many areas of both the natural sciences and social sciences.

Computational science applies computing power to simulate real-world situations, enabling a better understanding of scientific problems than formal mathematics alone can achieve. According to the Society for Industrial and Applied Mathematics, computation is now as important as theory and experiment in advancing scientific knowledge.

Whether mathematics itself is properly classified as science has been a matter of some debate. Some thinkers see mathematicians as scientists, regarding physical experiments as inessential or mathematical proofs as equivalent to experiments. Others do not see mathematics as a science, since it does not require an experimental test of its theories and hypotheses. Mathematical theorems and formulas are obtained by logical derivations which presume axiomatic systems, rather than the combination of empirical observation and logical reasoning that has come to be known as scientific method. In general, mathematics is classified as formal science, while natural and social sciences are classified as empirical sciences.

Scientific community

[Figure: The Meissner effect causes a magnet to levitate above a superconductor.]

The scientific community consists of the total body of scientists, together with their relationships and interactions. It is normally divided into "sub-communities", each working on a particular field within science.

Fields

Fields of science are widely recognized categories of specialized expertise, and typically embody their own terminology and nomenclature. Each field is commonly represented by one or more scientific journals, where peer-reviewed research is published.

Institutions

[Figure: Louis XIV visiting the Académie des sciences in 1671.]

Learned societies for the communication and promotion of scientific thought and experimentation have existed since the Renaissance period. The oldest surviving institution is the Accademia dei Lincei in Italy. National academies of sciences are distinguished institutions that exist in a number of countries, beginning with the British Royal Society in 1660 and the French Académie des Sciences in 1666.

International scientific organizations, such as the International Council for Science, have since been formed to promote cooperation between the scientific communities of different nations. More recently, influential government agencies have been created to support scientific research, including the National Science Foundation in the U.S.

Other prominent organizations include the National Scientific and Technical Research Council in Argentina, the academies of science of many nations, CSIRO in Australia, Centre national de la recherche scientifique in France, Max Planck Society and Deutsche Forschungsgemeinschaft in Germany, and in Spain, CSIC.

Literature

An enormous range of scientific literature is published. Scientific journals communicate and document the results of research carried out in universities and various other research institutions, serving as an archival record of science. The first scientific journals, Journal des Sçavans followed by the Philosophical Transactions, began publication in 1665. Since that time the total number of active periodicals has steadily increased. As of 1981, one estimate for the number of scientific and technical journals in publication was 11,500. Today PubMed lists almost 40,000 journals related to the medical sciences alone.

Most scientific journals cover a single scientific field and publish the research within that field; the research is normally expressed in the form of a scientific paper. Science has become so pervasive in modern societies that it is generally considered necessary to communicate the achievements, news, and ambitions of scientists to a wider populace.

Science magazines such as New Scientist, Science & Vie and Scientific American cater to the needs of a much wider readership and provide a non-technical summary of popular areas of research, including notable discoveries and advances in certain fields of research. Science books engage the interest of many more people. Tangentially, the science fiction genre, primarily fantastic in nature, engages the public imagination and transmits the ideas, if not the methods, of science.

Recent efforts to intensify or develop links between science and non-scientific disciplines such as literature or, more specifically, poetry, include the Creative Writing Science resource developed through the Royal Literary Fund.

Philosophy of science

[Figure: Velocity-distribution data of a gas of rubidium atoms, confirming the discovery of a new phase of matter, the Bose–Einstein condensate.]

The philosophy of science seeks to understand the nature and justification of scientific knowledge. It has proven difficult to provide a definitive account of scientific method that can decisively serve to distinguish science from non-science. Thus there are legitimate arguments about exactly where the borders are, which is known as the problem of demarcation. There is nonetheless a set of core precepts that have broad consensus among published philosophers of science and within the scientific community at large. For example, it is universally agreed that scientific hypotheses and theories must be capable of being independently tested and verified by other scientists in order to become accepted by the scientific community.

There are different schools of thought in the philosophy of scientific method. Methodological naturalism maintains that scientific investigation must adhere to empirical study and independent verification as a process for properly developing and evaluating natural explanations for observable phenomena. Methodological naturalism, therefore, rejects supernatural explanations, arguments from authority and biased observational studies. Critical rationalism instead holds that unbiased observation is not possible and a demarcation between natural and supernatural explanations is arbitrary; it instead proposes falsifiability as the landmark of empirical theories and falsification as the universal empirical method. Critical rationalism argues for the ability of science to increase the scope of testable knowledge, but at the same time against its authority, by emphasizing its inherent fallibility. It proposes that science should be content with the rational elimination of errors in its theories rather than seeking their verification (such as claiming certain or probable proof or disproof; both the proposal and falsification of a theory are only of methodological, conjectural, and tentative character in critical rationalism). Instrumentalism rejects the concept of truth and emphasizes merely the utility of theories as instruments for explaining and predicting phenomena.

Biologist Stephen J. Gould maintained that certain philosophical propositions, namely (1) uniformity of law and (2) uniformity of processes across time and space, must first be assumed before one can proceed as a scientist doing science. Gould summarized this view as follows: "You cannot go to a rocky outcrop and observe either the constancy of nature's laws nor the working of unknown processes. It works the other way around." You first assume these propositions and "then you go to the outcrop of rock."

Pseudoscience, fringe science, and junk science

An area of study or speculation that masquerades as science in an attempt to claim a legitimacy that it would not otherwise be able to achieve is sometimes referred to as pseudoscience, fringe science, or "alternative science". Another term, junk science, is often used to describe scientific hypotheses or conclusions which, while perhaps legitimate in themselves, are believed to be used to support a position that is seen as not legitimately justified by the totality of evidence. A variety of commercial advertising, ranging from hype to fraud, may fall into this category.

There can also be an element of political or ideological bias on all sides of such debates. Sometimes, research may be characterized as "bad science": research that is well-intentioned but is seen as incorrect, obsolete, incomplete, or an over-simplified exposition of scientific ideas. The term "scientific misconduct" refers to situations such as where researchers have intentionally misrepresented their published data or have purposely given credit for a discovery to the wrong person.

Philosophical critiques

Historian Jacques Barzun termed science "a faith as fanatical as any in history" and warned against the use of scientific thought to suppress considerations of meaning as integral to human existence. Many recent thinkers, such as Carolyn Merchant, Theodor Adorno and E. F. Schumacher considered that the 17th century scientific revolution shifted science from a focus on understanding nature, or wisdom, to a focus on manipulating nature, i.e. power, and that science's emphasis on manipulating nature leads it inevitably to manipulate people, as well. Science's focus on quantitative measures has led to critiques that it is unable to recognize important qualitative aspects of the world.

Philosopher of science Paul K. Feyerabend advanced the idea of epistemological anarchism, which holds that there are no useful and exception-free methodological rules governing the progress of science or the growth of knowledge, and that the idea that science can or should operate according to universal and fixed rules is unrealistic, pernicious and detrimental to science itself. Feyerabend advocates treating science as an ideology alongside others such as religion, magic and mythology, and considers the dominance of science in society authoritarian and unjustified. He also contended (along with Imre Lakatos) that the demarcation problem of distinguishing science from pseudoscience on objective grounds is not possible and thus fatal to the notion of science running according to fixed, universal rules.

Feyerabend also criticized science for not having evidence for its own philosophical precepts, particularly the notions of uniformity of law and uniformity of process across time and space. "We have to realize that a unified theory of the physical world simply does not exist" says Feyerabend, "We have theories that work in restricted regions, we have purely formal attempts to condense them into a single formula, we have lots of unfounded claims (such as the claim that all of chemistry can be reduced to physics), phenomena that do not fit into the accepted framework are suppressed; in physics, which many scientists regard as the one really basic science, we have now at least three different points of view...without a promise of conceptual (and not only formal) unification".

Professor Stanley Aronowitz criticizes science for operating on the presumption that the only acceptable criticisms of science are those conducted within the methodological framework that science has set up for itself, and for insisting that only those who have been inducted into its community, through training and credentials, are qualified to make such criticisms. Aronowitz also alleges that while scientists consider it absurd that fundamentalist Christians use biblical references to bolster their claim that the Bible is true, scientists use the same tactic when they employ the tools of science to settle disputes concerning its own validity.

Psychologist Carl Jung believed that though science attempted to understand all of nature, the experimental method imposed artificial and conditional questions that evoke equally artificial answers. Instead of these 'artificial' methods, Jung encouraged testing the world empirically in a holistic manner. David Parkin compared the epistemological stance of science to that of divination. He suggested that, to the degree that divination is an epistemologically specific means of gaining insight into a given question, science itself can be considered a form of divination that is framed from a Western view of the nature (and thus possible applications) of knowledge.

Several academics have offered critiques concerning ethics in science. In Science and Ethics, for example, the philosopher Bernard Rollin examines the relevance of ethics to science, and argues in favor of making education in ethics part and parcel of scientific training.

Media perspectives

The mass media face a number of pressures that can prevent them from accurately depicting competing scientific claims in terms of their credibility within the scientific community as a whole. Determining how much weight to give different sides in a scientific debate requires considerable expertise regarding the matter. Few journalists have real scientific knowledge, and even beat reporters who know a great deal about certain scientific issues may know little about other ones they are suddenly asked to cover.

http://en.wikipedia.org/wiki/Science

The National State Tax Service University of Ukraine

The National State Tax Service University of Ukraine is located on 23 hectares of parkland, 25 km from the capital city of Kyiv, in the picturesque town of Irpin.

Having progressed from technical school to college, institute, academy, National academy, university and National university, our educational establishment, with its rich 80-year history, has become a unique higher educational institution of the early third millennium.

The University is the base educational institution of the State Tax Service of Ukraine; it has branches in the cities of Zhytomyr, Vinnitsa, Simferopol, Storozhynets (Chernivtsi region) and Kamenets-Podilsky (Khmelnitsky region).

Our University trains bachelors, specialists and masters. More than 10,000 young men and women from all regions of Ukraine study full-time or by correspondence at the faculties of tax militia, law, accounting and economics, finance, taxation, correspondence study and military training.

The invaluable capital of the University is its teaching staff: among its 540 highly skilled teachers there are 5 academicians, 55 doctors and 154 candidates of sciences, and over 191 professors and associate professors (docents).

The Kyiv Financial and Economic College operates within the structure of the University to train junior specialists.

Among 500 highly skilled teachers there are 6 academicians, 60 doctors and more than 200 candidates of sciences, 55 professors and 137 associate professors.

Thanks to its modern teaching, methodological and material resources, the University conducts its educational process in line with current educational requirements. Over its existence, the institution has trained almost 60,000 highly skilled specialists.

The Academic Council, which comprises 55 experienced researchers and teachers, coordinates research work. The University offers postgraduate study and has specialized councils for training candidates of sciences in economics and law.

Scientific conferences, including international ones, are held on a regular basis. The Irpin International Pedagogical Readings have become a traditional event. Members of the students' and cadets' scientific society have repeatedly won prizes at All-Ukrainian and international conferences.

The University's teachers take part in the pedagogical experiment on introducing a credit-modular system for organizing the educational process. The University has a Coordination Council whose functions include supporting and summarizing the results of implementing the provisions of the Bologna Declaration.

The library, whose collection exceeds 170 thousand volumes, plays an important role in the educational process.

The Information and Publishing Center publishes the "Tax academy" newspaper and the "Naukovy visnyk" collection of scientific papers, produces video materials about university life, maintains the University website, and issues books and manuals.

The activities of the Student Self-Government cover study, students' everyday life, leisure, participation in public life, scientific research, and amateur creative work.

"Suzirja", the Cultural and Art Center of the National STS University of Ukraine, comprises 20 creative groups and studios of various genres; it is a celebration of music, poetry and dance. Its groups are known not only in Ukraine but also in Poland, Cyprus, Germany, Spain, the USA, and Canada.

Athletes who study and train at the University are known all over the world. Among the University's students there are 20 Masters of Sport of International Class who have taken part in and won prizes at the Olympic Games, the Universiades, and the World, European and Ukrainian championships.

The University provides everything needed not only for study but also for living and recreation. Apartment buildings for the teaching staff, an Information Centre and a student hostel have been built. The teaching facilities of the tax militia and military training faculties are being expanded.

The University has its own medical and sanitary department, consisting of an outpatient clinic, a day hospital and a clinical laboratory.

Life at the National STS University is in full swing. You are welcome to visit us and take part in these lively and interesting events yourselves.

The post address: National STS university of Ukraine, K.Marx str., 31, Irpin, Kyiv region, Ukraine, 08201

Phone: (+3804497) 57571 Fax: (+3804497) 60294

E-mail: admin@asta.edu.ua

Infotech

The Shamanistic Tradition

The start of the modern science that we call "Computer Science" can be traced back to an age long ago when man still dwelled in caves or in the forest and lived in groups for protection and survival from the harsher elements on the Earth. Many of these groups possessed some primitive form of animistic religion; they worshipped the sun, the moon, the trees, or sacred animals. Within the tribal group was one individual to whom fell the responsibility for the tribe's spiritual welfare. It was he or she who decided when to hold both the secret and public religious ceremonies, and who interceded with the spirits on behalf of the tribe. In order to hold the ceremonies correctly and so ensure a good harvest in the fall and fertility in the spring, the shamans needed to be able to count the days or to track the seasons. From the shamanistic tradition, man developed the first primitive counting mechanisms: notches on sticks or marks on walls.

A Primitive Calendar

From the caves and the forests, man slowly evolved and built structures such as Stonehenge. Stonehenge, which lies 13 km north of Salisbury, England, is believed to have been an ancient form of calendar designed to capture the light from the summer solstice in a specific fashion. The solstices have long been special days for various religious groups and cults. Archeologists and anthropologists today are not quite certain how the structure, believed to have been built about 2800 B.C., came to be erected, since the technology required to join the giant stones together and raise them upright seems to be beyond the technological level of the Britons at the time. It was once widely believed that the enormous stone edifice had been erected by the Druids, although the monument predates them by many centuries. Regardless of the identity of the builders, it remains today a monument to man's intense desire to count and to track the occurrences of the physical world around him.

A Primitive Calculator

Meanwhile in Asia, the Chinese were becoming very involved in commerce with the Japanese, Indians, and Koreans. Businessmen needed a way to tally accounts and bills. Somehow, out of this need, the abacus was born. The abacus is the first true precursor to the adding machines and computers which would follow. It worked somewhat like this:

The value assigned to each pebble (or bead, shell, or stick) is determined not by its shape but by its position: one pebble on a particular line or one bead on a particular wire has the value of 1; two together have the value of 2. A pebble on the next line, however, might have the value of 10, and a pebble on the third line would have the value of 100. Therefore, three properly placed pebbles--two with values of 1 and one with the value of 10--could signify 12, and the addition of a fourth pebble with the value of 100 could signify 112, using a place-value notational system with multiples of 10.

Thus, the abacus works on the principle of place-value notation: the location of the bead determines its value. In this way, relatively few beads are required to depict large numbers. The beads are counted, or given numerical values, by shifting them in one direction. The values are erased (freeing the counters for reuse) by shifting the beads in the other direction. An abacus is really a memory aid for the user making mental calculations, as opposed to the true mechanical calculating machines which were still to come.
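The place-value rule can be expressed in a few lines of Python. This is only an illustrative sketch: it assumes each rod (or line) is recorded as a plain count of beads, starting with the units rod, and the function names are hypothetical. It ignores the five-valued beads that many real abaci use.

```python
def beads_to_value(bead_counts):
    """bead_counts[0] is the units rod, bead_counts[1] the tens rod, and so on."""
    value = 0
    for position, count in enumerate(bead_counts):
        value += count * 10 ** position  # each rod is worth ten times the one before
    return value


def value_to_beads(value):
    """Split a non-negative integer into bead counts per rod, units rod first."""
    if value == 0:
        return [0]
    beads = []
    while value > 0:
        value, digit = divmod(value, 10)  # peel off the lowest decimal place
        beads.append(digit)
    return beads


print(beads_to_value([2, 1]))     # two units beads and one tens bead
print(beads_to_value([2, 1, 1]))  # add one bead on the hundreds rod
print(value_to_beads(112))
```

The two calls mirror the pebble example in the text: three beads represent 12, and one more bead on the hundreds rod represents 112.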

Forefathers of Computing

For over a thousand years after the Chinese invented the abacus, little progress was made in automating counting and mathematics. The Greeks came up with numerous mathematical formulae and theorems, but all of the newly discovered mathematics had to be worked out by hand. A mathematician was often a person who sat in the back room of an establishment with several others, all working on the same problem. This redundancy was there to ensure that the answer was correct. It could take weeks or months of laborious work by hand to verify a proposed theorem. Most of the tables of integrals, logarithms, and trigonometric values were worked out this way, their accuracy unchecked until machines could generate the tables in far less time and with more accuracy than a team of humans could ever hope to achieve.

The First Mechanical Calculator

Blaise Pascal, the noted mathematician, thinker, and scientist, built the first mechanical adding machine in 1642, based on a design described by Hero of Alexandria (1st century AD) for adding up the distance a carriage travelled. The basic principle of his calculator is still used today in water meters and modern-day odometers. Instead of having a carriage wheel turn the gears, he made each ten-toothed wheel directly accessible to a person's hand (later inventors added keys and a crank), so that when the wheels were turned in the proper sequence, a series of numbers was entered and a cumulative sum was obtained. The gear train supplied a mechanical answer equal to the one obtained by using arithmetic.
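The heart of such a machine is the carry: when a ten-toothed wheel passes from 9 back to 0, it advances its neighbour by one tooth, just as an odometer does. The Python sketch below models only that logic, not the Pascaline's actual mechanics. It assumes the wheels are stored least significant first, that a number is entered by turning each wheel by its digit, and that a carry off the last wheel is simply lost; `turn_wheel`, `enter_number` and `reading` are hypothetical names.

```python
def turn_wheel(wheels, position, steps):
    """Turn one ten-toothed wheel by `steps` teeth, propagating carries leftward."""
    wheels = list(wheels)
    while steps and position < len(wheels):
        total = wheels[position] + steps
        wheels[position] = total % 10  # the digit now showing on this wheel
        steps = total // 10            # teeth passed on to the next wheel (the carry)
        position += 1
    return wheels  # a carry beyond the last wheel is lost, like an odometer rolling over


def enter_number(wheels, n):
    """Enter n by turning each wheel by the matching decimal digit."""
    for position, digit in enumerate(reversed(str(n))):
        wheels = turn_wheel(wheels, position, int(digit))
    return wheels


def reading(wheels):
    """The cumulative sum shown in the machine's windows."""
    return sum(digit * 10 ** i for i, digit in enumerate(wheels))


wheels = [0] * 6
wheels = enter_number(wheels, 275)
wheels = enter_number(wheels, 48)
print(reading(wheels))
```

Entering 275 and then 48 leaves the machine showing their sum, 323, with the carries handled by the wheels rather than by the operator.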

This first mechanical calculator, called the Pascaline, had several disadvantages. Although it did offer a substantial improvement over manual calculations, only Pascal himself could repair the device and it cost more than the people it replaced! In addition, the first signs of technophobia emerged with mathematicians fearing the loss of their jobs due to progress.

The Difference Engine

While Thomas de Colmar was developing the first successful commercial calculator, Charles Babbage realized as early as 1812 that many long computations consisted of operations that were regularly repeated. He theorized that it must be possible to design a calculating machine which could do these operations automatically. He produced a prototype of this "difference engine" by 1822 and, with the help of the British government, started work on the full machine in 1823. It was intended to be steam-powered; fully automatic, even to the printing of the resulting tables; and commanded by a fixed instruction program.
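The regularly repeated operations Babbage had in mind come from the method of finite differences: once the first few differences of a polynomial are known, every further table entry can be produced by additions alone. The Python sketch below illustrates the method, not Babbage's mechanism. It assumes the tabulated function is a polynomial, so that its highest-order difference is constant, and the function names are hypothetical.

```python
def initial_differences(values):
    """From f(0), f(1), ..., compute [f(0), Δf(0), Δ²f(0), ...]."""
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]  # next order of differences
    return diffs


def tabulate_by_differences(diffs, count):
    """Produce `count` table entries using nothing but repeated addition."""
    diffs = list(diffs)
    table = []
    for _ in range(count):
        table.append(diffs[0])
        # f(x+1) = f(x) + Δf(x); Δf(x+1) = Δf(x) + Δ²f(x); and so on.
        # Updating from the lowest order upward uses each old value before it changes.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table


# f(x) = x*x + x + 41: three starting values are enough for a quadratic.
start = initial_differences([41, 43, 47])
print(start)
print(tabulate_by_differences(start, 5))
```

After the three starting values are computed by hand, the remaining entries (53, 61, ...) follow from additions only, which is exactly the kind of work a machine can repeat without error.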

The Conditional

In 1833, Babbage ceased working on the difference engine because he had a better idea. His new idea was to build an "analytical engine." The analytical engine was a real parallel decimal computer which would operate on words of 50 decimals and was able to store 1000 such numbers. The machine would include a number of built-in operations such as conditional control, which allowed the instructions for the machine to be executed in a specific order rather than in numerical order. The instructions for the machine were to be stored on punched cards, similar to those used on a Jacquard loom.
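To illustrate why conditional control matters, here is a toy card-driven machine in Python. The instruction names (`SET`, `MUL`, `SUB`, `JUMP_IF_POSITIVE`) are invented for this sketch and do not reproduce the analytical engine's actual operations. The point is only that a conditional jump lets a short deck of cards repeat a step (here, to compute 5 factorial) instead of running strictly from the first card to the last.

```python
def run_cards(cards):
    """Execute a deck of instruction cards; return the final contents of the store."""
    store = {}
    pc = 0  # index of the next card to read
    while pc < len(cards):
        op, *args = cards[pc]
        pc += 1
        if op == "SET":
            reg, value = args
            store[reg] = value
        elif op == "MUL":
            dst, src = args
            store[dst] *= store[src]
        elif op == "SUB":
            reg, value = args
            store[reg] -= value
        elif op == "JUMP_IF_POSITIVE":
            reg, target = args
            if store[reg] > 0:
                pc = target  # conditional control: go back to an earlier card
        else:
            raise ValueError(f"unknown card {op!r}")
    return store


cards = [
    ("SET", "acc", 1),
    ("SET", "n", 5),
    ("MUL", "acc", "n"),           # card 2: acc = acc * n
    ("SUB", "n", 1),               # card 3: n = n - 1
    ("JUMP_IF_POSITIVE", "n", 2),  # card 4: repeat cards 2-4 while n > 0
]
print(run_cards(cards)["acc"])
```

Without the jump, computing 5! would need a separate multiply card for every factor; with it, the same five cards work for any starting value of `n`.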

Herman Hollerith

A step toward automated computation was the introduction of punched cards, which were first successfully used in connection with computing in 1890 by Herman Hollerith, working for the U.S. Census Bureau. He developed a device which could automatically read census information that had been punched onto cards. Surprisingly, he did not get the idea from the work of Babbage, but rather from watching a train conductor punch tickets. As a result of his invention, reading errors were greatly reduced, work flow was increased, and, more importantly, stacks of punched cards could be used as an accessible memory store of almost unlimited capacity; furthermore, different problems could be stored on different batches of cards and worked on as needed. Hollerith's tabulator became so successful that he started his own firm to market the device; this company eventually became International Business Machines (IBM).
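Tabulation amounts to counting: each punched position on a card, when read, advances the counter for that category. The Python sketch below shows only this counting logic. The card layout and category names are invented for illustration, and a card is modelled simply as the set of positions punched for one person.

```python
from collections import Counter


def tabulate(cards):
    """Count how many cards have a hole in each punched position."""
    dials = Counter()
    for card in cards:
        for hole in card:     # each hole completes a circuit when the card is read...
            dials[hole] += 1  # ...which advances the counter dial for that category
    return dials


# Hypothetical census cards, one per person.
cards = [
    {"male", "age_20_29", "farmer"},
    {"female", "age_20_29"},
    {"female", "age_30_39", "farmer"},
]
print(tabulate(cards))
```

Because the cards themselves hold the data, the same stack can be run through again to answer a different question, which is the "accessible memory store" the text describes.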

Binary Representation

Hollerith's machine, though, had limitations. It was strictly limited to tabulation: the punched cards could not be used to direct more complex computations. In 1941, Konrad Zuse(*), a German who had developed a number of calculating machines, released the first programmable computer designed to solve complex engineering equations. The machine, called the Z3, was controlled by perforated strips of discarded movie film. As well as being controllable by these celluloid strips, it was also the first machine to work on the binary system, as opposed to the more familiar decimal system.

The binary system is composed of 0s and 1s. A punched card, with its two states (a hole or no hole), was admirably suited to representing things in binary. If the card reader detected a hole in a column, a 1 was appended to the number being read; if no hole was present, a 0 was appended. The total number of possible numbers can be calculated by raising 2 to the power of the number of bits in the binary number. A bit is simply a single binary digit: a 0 or a 1. Thus, with a binary number of 6 bits, 64 different numbers could be represented (2^6 = 64, or in general 2^n for n bits).
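The card-reading rule and the 2^n count can be checked with a few lines of Python. The notation is an assumption made for this sketch: `O` marks a punched hole (1) and `.` an unpunched position (0), read left to right with the most significant bit first. The function names are hypothetical.

```python
from itertools import product


def read_card_columns(columns):
    """Turn a row of hole / no-hole marks into a number."""
    value = 0
    for mark in columns:
        bit = 1 if mark == "O" else 0
        value = value * 2 + bit  # shift left one binary place, then append the new bit
    return value


def all_patterns(n_bits):
    """Every possible hole / no-hole pattern across n_bits columns."""
    return ["".join(p) for p in product("O.", repeat=n_bits)]


print(read_card_columns("O.O"))  # hole, no hole, hole = binary 101
patterns = all_patterns(6)
print(len(patterns))                                 # number of 6-bit patterns
print(len({read_card_columns(p) for p in patterns}))  # all of them are distinct numbers
```

Enumerating every 6-column pattern gives 64 patterns, each reading as a different number from 0 to 63, which matches 2^6.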

Binary representation was going to prove important in the future design of computers, which took advantage of a multitude of two-state devices such as card readers, electric circuits which could be on or off, and vacuum tubes.

* Zuse died in December 1995.

Harvard Mark I

By the late 1930s punched-card machine techniques had become so well established and reliable that Howard Aiken, in collaboration with engineers at IBM, undertook construction of a large automatic digital computer based on standard IBM electromechanical parts. Aiken's machine, called the Harvard Mark I, handled 23-decimal-place numbers (words) and could perform all four arithmetic operations; moreover, it had special built-in programs, or subroutines, to handle logarithms and trigonometric functions. The Mark I was originally controlled from pre-punched paper tape without provision for reversal, so that automatic "transfer of control" instructions could not be programmed. Output was by card punch and electric typewriter. Although the Mark I used IBM rotating counter wheels as key components in addition to electromagnetic relays, the machine was classified as a relay computer. It was slow, requiring 3 to 5 seconds for a multiplication, but it was fully automatic and could complete long computations without human intervention. The Harvard Mark I was the first of a series of computers designed and built under Aiken's direction.

Alan Turing

Meanwhile, in Great Britain, the mathematician Alan Turing wrote a paper in 1936 entitled "On Computable Numbers", in which he described a hypothetical device, a Turing machine, that presaged programmable computers. The Turing machine was designed to perform logical operations and could read, write, or erase symbols written on the squares of an infinite paper tape. Its control unit is a finite-state machine: at each step in a computation, the machine's next action is looked up, from its current state and the symbol being read, in a finite list of instructions.

The Turing machine pictured here above the paper tape reads in the symbols from the tape one at a time. What we would like the machine to do is give us an output of 1 whenever it has read at least three 1s in a row from the tape; when it has not, it should output a 0. The reading and outputting can go on indefinitely. The diagram with the labelled states is known as a state diagram and provides a visual path of the possible states that the machine can enter, depending on the input. The red arrowed lines indicate an input of 0 from the tape to the machine. The blue arrowed lines indicate an input of 1. Output from the machine is labelled in neon green.

The Turing Machine

The machine starts off in the Start state. The first input is a 1, so we follow the blue line to State 1; the output is 0 because three or more 1s have not yet been read in. The next input is a 0, which leads the machine back to the Start state by following the red line. The read/write head on the Turing machine advances to the next input, which is a 1. Again, this takes the machine to State 1, and the read/write head advances to the next symbol on the tape. This too is a 1, leading to State 2. The machine is still outputting 0s, since it has not yet encountered three 1s in a row. The next input is also a 1, and following the blue line leads into State 3; the machine now outputs a 1, as it has read in at least three 1s. From this point, as long as the machine keeps reading in 1s, it will stay in State 3 and continue to output 1s. If a 0 is encountered, the machine returns to the Start state and starts counting 1s all over again.
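The walkthrough above can be written as a transition table. This Python sketch uses the state names from the description and outputs 1 exactly when the machine is in State 3. Strictly speaking it only reads the tape and never writes to it, so it models the finite-state control described here rather than a complete Turing machine; the names `TRANSITIONS` and `run_detector` are hypothetical.

```python
# (current state, symbol read) -> next state
TRANSITIONS = {
    ("Start", 0): "Start",   ("Start", 1): "State 1",
    ("State 1", 0): "Start", ("State 1", 1): "State 2",
    ("State 2", 0): "Start", ("State 2", 1): "State 3",
    ("State 3", 0): "Start", ("State 3", 1): "State 3",  # stay in State 3 while 1s continue
}


def run_detector(tape):
    """Read symbols one at a time; output 1 once at least three 1s in a row have been read."""
    state = "Start"
    outputs = []
    for symbol in tape:
        state = TRANSITIONS[(state, symbol)]
        outputs.append(1 if state == "State 3" else 0)
    return outputs


print(run_detector([1, 0, 1, 1, 1]))  # the input sequence traced in the text
```

For the tape traced in the text (1, 0, 1, 1, 1) the outputs are 0, 0, 0, 0, 1: the machine only switches to outputting 1 on the third consecutive 1.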

Turing's purpose was not to invent a computer, but rather to describe problems which are logically possible to solve. His hypothetical machine, however, foreshadowed certain characteristics of modern computers that would follow. For example, the endless tape could be seen as a form of general purpose internal memory for the machine in that the machine was able to read, write, and erase it--just like modern day RAM.

ENIAC

Back in America, with the success of Aiken's Harvard Mark I as the first major American development in the computing race, work was proceeding on the next great breakthrough. The Americans' second contribution was the giant ENIAC, developed by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania. ENIAC (Electronic Numerical Integrator and Computer) used words of 10 decimal digits rather than binary numbers like those of Zuse's Z3. ENIAC was also the first machine to use more than 2,000 vacuum tubes; it used nearly 18,000. Housing all those vacuum tubes and the machinery required to keep them cool took up over 167 square meters (1,800 square feet) of floor space. It had punched-card input and output and, for arithmetic, 1 multiplier, 1 divider-square rooter, and 20 adders employing decimal "ring counters," which served as adders and also as quick-access (0.0002 seconds) read-write register storage.
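A decimal ring counter can be pictured as ten stages arranged in a loop, with exactly one stage "on" at a time: each incoming pulse moves the "on" position forward by one, and wrapping from 9 back to 0 produces a carry. The Python classes below are a simplified logical model under that assumption, with hypothetical names; they say nothing about ENIAC's actual tube circuits or timing.

```python
class DecadeRingCounter:
    """Ten stages in a loop; the single stage that is 'on' marks the stored digit."""

    def __init__(self):
        self.stages = [True] + [False] * 9

    @property
    def digit(self):
        return self.stages.index(True)

    def pulse(self):
        """Advance one stage; return True (a carry) when wrapping from 9 to 0."""
        current = self.digit
        self.stages[current] = False
        self.stages[(current + 1) % 10] = True
        return current == 9


def add_number(counters, n):
    """Feed each decimal digit of n to its counter as that many pulses, units first."""
    for position, digit in enumerate(reversed(str(n))):
        for _ in range(int(digit)):
            pos = position
            # Pulse this counter; on a carry, pulse the next counter up as well.
            while pos < len(counters) and counters[pos].pulse():
                pos += 1


def value(counters):
    return sum(c.digit * 10 ** i for i, c in enumerate(counters))


accumulator = [DecadeRingCounter() for _ in range(3)]
add_number(accumulator, 95)
add_number(accumulator, 8)
print(value(accumulator))
```

Adding 8 to 95 makes both the units and the tens counters wrap, so the carries ripple up to the hundreds counter and the accumulator reads 103. The same counters hold the running total between additions, which is why they could double as register storage.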

The executable instructions composing a program were embodied in the separate units of ENIAC, which were plugged together to form a route through the machine for the flow of computations. These connections had to be redone for each different problem, together with presetting function tables and switches. This "wire-your-own" instruction technique was inconvenient, and only with some license could ENIAC be considered programmable; it was, however, efficient in handling the particular programs for which it had been designed. ENIAC is generally acknowledged to be the first successful high-speed electronic digital computer (EDC) and was productively used from 1946 to 1955. A controversy developed in 1971, however, over the patentability of ENIAC's basic digital concepts, the claim being made that another U.S. physicist, John V. Atanasoff, had already used the same ideas in a simpler vacuum-tube device he built in the 1930s while at Iowa State College. In 1973, the court found in favor of the company that had relied on Atanasoff's claim, and Atanasoff received the acclaim he rightly deserved.

John von Neumann

In 1945, mathematician John von Neumann undertook a study of computation that demonstrated that a computer could have a simple, fixed structure, yet be able to execute any kind of computation given properly programmed control, without the need for hardware modification. Von Neumann contributed a new understanding of how practical fast computers should be organized and built; these ideas, often referred to as the stored-program technique, became fundamental for future generations of high-speed digital computers and were universally adopted. The primary advances were the provision of a special type of machine instruction, called conditional control transfer, which permitted the program sequence to be interrupted and reinitiated at any point (similar to the system suggested by Babbage for his analytical engine), and the storing of all instruction programs together with data in the same memory unit, so that, when desired, instructions could be arithmetically modified in the same way as data. In this sense, program and data were the same.
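A toy stored-program machine shows what it means for instructions and data to share one memory. In this hypothetical design (not von Neumann's actual instruction set), each memory cell holds an integer; an instruction is encoded as `opcode * 100 + address`, and `JUMPPOS` is a conditional control transfer. The program sums three numbers by repeatedly adding 1 to its own `ADD` instruction, so the instruction is modified arithmetically exactly as data would be.

```python
# Opcodes invented for this sketch only.
HALT, LOAD, ADD, STORE, SUB, JUMPPOS = range(6)


def word(opcode, address):
    """Encode an instruction as an ordinary number."""
    return opcode * 100 + address


def run(memory, max_steps=10_000):
    """Execute the toy machine; return the final memory contents."""
    mem = list(memory)  # one memory holds both instructions and data
    acc, pc = 0, 0      # accumulator and program counter
    for _ in range(max_steps):
        opcode, address = divmod(mem[pc], 100)  # decode the instruction word
        pc += 1
        if opcode == HALT:
            return mem
        elif opcode == LOAD:
            acc = mem[address]
        elif opcode == ADD:
            acc += mem[address]
        elif opcode == STORE:
            mem[address] = acc
        elif opcode == SUB:
            acc -= mem[address]
        elif opcode == JUMPPOS:  # conditional control transfer
            if acc > 0:
                pc = address
        else:
            raise ValueError(f"unknown opcode {opcode} at cell {pc - 1}")
    raise RuntimeError("step limit reached")


program = [
    word(LOAD, 30),    # 0: acc = running sum
    word(ADD, 20),     # 1: acc += next data item (this cell is rewritten each pass)
    word(STORE, 30),   # 2: save the running sum
    word(LOAD, 1),     # 3: fetch instruction 1 as if it were data...
    word(ADD, 31),     # 4: ...add 1 to its address field...
    word(STORE, 1),    # 5: ...and store it back
    word(LOAD, 32),    # 6: decrement the loop counter
    word(SUB, 31),     # 7
    word(STORE, 32),   # 8
    word(JUMPPOS, 0),  # 9: repeat while the counter is positive
    word(HALT, 0),     # 10
]
memory = program + [0] * (40 - len(program))
memory[20:23] = [5, 7, 9]  # the data to be summed
memory[31] = 1             # the constant 1
memory[32] = 3             # loop counter

final = run(memory)
print(final[30], final[1])  # the sum, and the rewritten ADD instruction
```

The sum 5 + 7 + 9 = 21 ends up in cell 30, and cell 1 now holds the instruction "ADD 23": the program has rewritten itself while running.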

As a result of these techniques and several others, computing and programming became faster, more flexible, and more efficient, with the instructions in subroutines performing far more computational work. Frequently used subroutines did not have to be reprogrammed for each new problem but could be kept intact in "libraries" and read into memory when needed. Thus, much of a given program could be assembled from the subroutine library. The all-purpose computer memory became the assembly place in which parts of a long computation were stored, worked on piecewise, and assembled to form the final results. The computer control served as an errand runner for the overall process. As soon as the advantages of these techniques became clear, the techniques became standard practice. The first generation of modern programmed electronic computers to take advantage of these improvements appeared in 1947.

This group included computers using random access memory (RAM), which is memory designed to give almost constant access time to any particular piece of information. These machines had punched-card or punched-tape input and output devices and RAMs of 1,000 words. Physically, they were much more compact than ENIAC: some were about the size of a grand piano and required 2,500 small electron tubes, far fewer than the earlier machines. The first-generation stored-program computers required considerable maintenance, attained perhaps 70% to 80% reliable operation, and were used for 8 to 12 years. Typically, they were programmed directly in machine language, although by the mid-1950s progress had been made in several aspects of advanced programming. This group of machines included EDVAC and UNIVAC, one of the first commercially available computers.

EDVAC

EDVAC (Electronic Discrete Variable Automatic Computer) was to be a vast improvement upon ENIAC. Mauchly and Eckert started working on it two years before ENIAC even went into operation. Their idea was to have the program for the computer stored inside the computer. This would be possible because EDVAC was going to have more internal memory than any other computing device to date. Memory was to be provided through the use of mercury delay lines. The idea was that pulses sent through a tube of mercury could be picked up at the far end, regenerated, and fed back in at the start, so that they circulated continuously and could be retrieved as they came round: another two-state device for storing 0s and 1s. This on/off switchability for the memory was required because EDVAC was to use binary rather than decimal numbers, thus simplifying the construction of the arithmetic units.
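A delay line is a serial memory: a bit can only be read or written at the moment it passes the end of the line, so access time depends on where the bit happens to be. The Python sketch below models that behaviour with a rotating queue. It is a logical illustration only, assuming a line of fixed length whose emerging pulse is fed straight back in; the class and method names are hypothetical.

```python
from collections import deque


class DelayLine:
    """Toy model of a recirculating delay-line memory with serial access."""

    def __init__(self, size):
        self.size = size
        self.line = deque([0] * size)  # line[0] is the bit arriving at the read end
        self.head = 0                  # address of the bit currently at line[0]

    def _tick(self):
        # The emerging pulse is regenerated and fed back into the input end.
        self.line.rotate(-1)
        self.head = (self.head + 1) % self.size

    def _wait_for(self, address):
        """Let bits circulate until `address` reaches the read end; return ticks waited."""
        waited = 0
        while self.head != address:
            self._tick()
            waited += 1
        return waited

    def write(self, address, bit):
        self._wait_for(address)
        self.line[0] = bit

    def read(self, address):
        waited = self._wait_for(address)
        return self.line[0], waited


memory = DelayLine(8)
memory.write(5, 1)
print(memory.read(5))  # the bit is already at the read end: no waiting
print(memory.read(4))  # address 4 has just gone past: wait almost a full cycle
```

The wait in the second read is the price of circulating storage, and it is the contrast with the near-constant access time of the random access memory described earlier.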

Technology Advances

In the late 1940s and the 1950s, two devices were invented that would transform the computer field and start the computer revolution. The first of these was the transistor. Invented in 1947 by William Shockley, John Bardeen, and Walter Brattain of Bell Labs, the transistor was destined to end the era of vacuum tubes in computers, radios, and other electronics.

The vacuum tube, used up to this time in almost all the computers and calculating machines, had been invented by American physicist Lee De Forest in 1906. The vacuum tube worked by using large amounts of electricity to heat a filament inside the tube until it was cherry red. One result of heating this filament up was the release of electrons into the tube, which could be controlled by other elements within the tube. De Forest's original device was a triode, which could control the flow of electrons to a positively charged plate inside the tube. A zero could then be represented by the absence of an electron current to the plate; the presence of a small but detectable current to the plate represented a one.

Vacuum tubes were highly inefficient, required a great deal of space, and needed to be replaced often. Computers such as ENIAC had 18,000 tubes in them, and housing all these tubes and cooling the rooms from the heat they produced was not cheap. The transistor promised to solve all of these problems, and it did so. Transistors, however, had their problems too. The main problem was that transistors, like other electronic components, needed to be soldered together. As a result, the more complex the circuits became, the more numerous and complicated the connections between the individual transistors became, and the greater the likelihood of faulty wiring.

In 1958, this problem too was solved by Jack St. Clair Kilby of Texas Instruments, who manufactured the first integrated circuit, or chip. A chip is really a collection of tiny transistors which are connected together when the chip is manufactured. Thus, the need for soldering together large numbers of transistors was practically eliminated; now connections were needed only to other electronic components. Besides saving space, the chip increased the speed of the machine, since the electrons had a shorter distance to travel.

The Altair

In 1971, Intel released the first microprocessor. The microprocessor was a specialized integrated circuit which was able to process four bits of data at a time. The chip included its own arithmetic logic unit, but a sizable portion of the chip was taken up by the control circuits for organizing the work, which left less room for the data-handling circuitry. Thousands of hackers could now aspire to own their own personal computer. Computers up to this point had been strictly the domain of the military, universities, and very large corporations, simply because of the enormous cost of the machines and their maintenance. In 1975, the cover of Popular Electronics featured a story on the "world's first minicomputer kit to rival commercial models....Altair 8800." The Altair, produced by a company called Micro Instrumentation and Telemetry Systems (MITS), retailed for $397, which made it easily affordable for the small but growing hacker community.
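Processing "four bits at a time" means that wider numbers have to be handled in 4-bit pieces (nibbles), with a carry passed from one piece to the next. The helpers below are a hypothetical Python illustration of that idea, not the Intel chip's actual instruction set.

```python
MASK4 = 0b1111  # a 4-bit register can hold 0..15


def add4(a, b, carry_in=0):
    """Add two 4-bit values; return (4-bit sum, carry out)."""
    total = (a & MASK4) + (b & MASK4) + carry_in
    return total & MASK4, total >> 4


def add_in_nibbles(x, y, nibbles):
    """Add two wider numbers four bits at a time, lowest nibble first."""
    result, carry = 0, 0
    for i in range(nibbles):
        a = (x >> (4 * i)) & MASK4
        b = (y >> (4 * i)) & MASK4
        digit, carry = add4(a, b, carry)  # the carry feeds into the next nibble
        result |= digit << (4 * i)
    return result, carry


print(add4(9, 9))                    # 18 does not fit in 4 bits
print(add_in_nibbles(200, 100, 2))   # an 8-bit addition done in two 4-bit steps
```

`add4(9, 9)` gives a sum of 2 with a carry of 1 (that is, 16 + 2 = 18), and adding 200 + 100 in two 4-bit steps yields 44 with a final carry, since 300 = 256 + 44 does not fit in 8 bits.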

The Altair was not designed for the computer novice. The kit required assembly by the owner, and it was then necessary to write software for the machine, since none was yet commercially available. The Altair had a 256-byte memory, about the size of a paragraph, and had to be programmed in machine code (0s and 1s). The programming was accomplished by manually flipping switches located on the front of the Altair.
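Programming by switches amounts to reading each row of switches as a binary number and storing it in memory. The sketch below assumes a simplified, hypothetical panel with eight address switches (enough to select any of 256 bytes), eight data switches, and a `deposit` action that stores the data byte at the selected address; the real Altair front panel is not reproduced here.

```python
UP, DOWN = True, False  # a switch flipped up means 1, down means 0


def switches_to_int(switches):
    """Read a row of switches as a binary number, most significant switch first."""
    value = 0
    for up in switches:
        value = (value << 1) | int(up)
    return value


class FrontPanel:
    """Hypothetical simplified panel over a 256-byte memory."""

    def __init__(self, size=256):
        self.memory = bytearray(size)

    def deposit(self, address_switches, data_switches):
        if len(address_switches) != 8 or len(data_switches) != 8:
            raise ValueError("this sketch assumes 8 address and 8 data switches")
        address = switches_to_int(address_switches)
        self.memory[address] = switches_to_int(data_switches)


panel = FrontPanel()
# Store the byte 11000011 at address 00000001.
panel.deposit([DOWN] * 7 + [UP], [UP, UP, DOWN, DOWN, DOWN, DOWN, UP, UP])
print(panel.memory[1])
```

Every byte of a program had to be entered this way, one address and one data pattern at a time, which is why even a short program took considerable patience.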

Creation of Microsoft

Two young hackers were intrigued by the Altair, having seen the article in Popular Electronics. They decided on their own that the Altair needed software and took it upon themselves to contact MITS owner Ed Roberts and offer to provide him with a BASIC which would run on the Altair. BASIC (Beginner's All-purpose Symbolic Instruction Code) had originally been developed in 1963 by Thomas Kurtz and John Kemeny, members of the Dartmouth mathematics department. BASIC was designed to provide an interactive, easy method for upcoming computer scientists to program computers. It allowed the use of statements such as print "hello" or let b=10. It would be a great boost for the Altair if BASIC were available, so Roberts agreed to pay for it if it worked. The two young hackers worked feverishly and finished just in time to present it to Roberts. It was a success. The two young hackers? They were William Gates and Paul Allen. They later went on to form Microsoft and produce BASIC and operating systems for various machines.
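To make the two example statements concrete, here is a toy interpreter for just `LET` and `PRINT`, written in Python. It is a hypothetical illustration, far simpler than Dartmouth BASIC or the Altair BASIC described here: expressions may only add integers and variables, keywords and variable names are case-insensitive, and there are no line numbers.

```python
def evaluate(expr, variables):
    """Evaluate a sum of integer literals and variable names, e.g. 'b+5'."""
    total = 0
    for term in expr.split("+"):
        term = term.strip().upper()
        total += int(term) if term.isdigit() else variables[term]
    return total


def run_basic(lines):
    """Run LET and PRINT statements in order; return the printed lines."""
    variables = {}
    output = []
    for line in lines:
        keyword, _, rest = line.strip().partition(" ")
        keyword = keyword.upper()
        if keyword == "LET":
            name, _, expr = rest.partition("=")
            variables[name.strip().upper()] = evaluate(expr, variables)
        elif keyword == "PRINT":
            rest = rest.strip()
            if rest.startswith('"') and rest.endswith('"'):
                output.append(rest[1:-1])  # a string literal is printed as-is
            else:
                output.append(str(evaluate(rest, variables)))
        else:
            raise SyntaxError(f"unsupported statement: {line}")
    return output


for text in run_basic(['print "hello"', "let b=10", "let c=b+5", "print c"]):
    print(text)
```

The program prints `hello` and then `15`. Each statement is executed as soon as it is read, which is the interactive style BASIC was designed for.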

BASIC & Other Languages

BASIC was not the only game in town. By this time, a number of other specialized and general-purpose languages had been developed. A surprising number of today's popular languages have actually been around since the 1950s. FORTRAN, developed by a team of IBM programmers, was one of the first high-level languages: languages in which the programmer does not have to deal with the machine code of 0s and 1s. It was designed to express scientific and mathematical formulas. For a high-level language, it was not very easy to program in. Luckily, better languages came along.

In 1958, a group of computer scientists met in Zurich, and from this meeting came ALGOL (ALGOrithmic Language). ALGOL was intended to be a universal, machine-independent language, but it was not as successful as FORTRAN, since it lacked FORTRAN's close association with IBM. A revised version, ALGOL-60, influenced many later languages, among them C (by way of CPL, BCPL, and B), which is the standard choice for programming requiring detailed control of hardware. After that came COBOL (COmmon Business Oriented Language). COBOL was developed in 1960 by a joint committee. It was designed to produce applications for the business world and took the novel approach of separating the data descriptions from the actual program. This enabled the data descriptions to be referred to by many different programs.

In the late 1960s, the Swiss computer scientist Niklaus Wirth designed Pascal, released in 1970 and one of several languages he created. Pascal forced programmers to program in a structured, logical fashion and to pay close attention to the different types of data in use. He later followed up on Pascal with Modula-2, which was very similar to Pascal in structure and syntax; Modula-3, a later descendant, was designed by others.

The PC Explosion

Following the introduction of the Altair, a veritable explosion of personal computers occurred, starting with Steve Jobs and Steve Wozniak exhibiting the first Apple II at the First West Coast Computer Faire in San Francisco in 1977. The Apple II boasted built-in BASIC, colour graphics, and a 4,100-character memory for only $1,298. Programs and data could be stored on an everyday audio-cassette recorder. Before the end of the fair, Wozniak and Jobs had secured 300 orders for the Apple II, and from there Apple just took off.

Also introduced in 1977 was the TRS-80, a home computer manufactured by Tandy Radio Shack. Its second incarnation, the TRS-80 Model II, came complete with a 64,000-character memory and a disk drive to store programs and data on. At this time, only Apple and TRS had machines with disk drives. With the introduction of the disk drive, personal computer applications took off, as a floppy disk was a most convenient publishing medium for distributing software.

IBM, which up to this time had been producing mainframes and minicomputers for medium to large-sized businesses, decided that it had to get into the act and started working on the Acorn, which would later be called the IBM PC. The PC was the first computer designed for the home market to feature a modular design, so that pieces could easily be added to the architecture. Surprisingly, most of the components came from outside IBM, since building it with IBM parts would have cost too much for the home computer market. When it was introduced, the PC came with a 16,000-character memory, a keyboard from an IBM electric typewriter, and a connection for a tape cassette player, for $1265.

By 1984, Apple and IBM had come out with new models. Apple released the first-generation Macintosh, one of the first computers to come with a graphical user interface (GUI) and a mouse. The GUI made the machine much more attractive to home computer users because it was easy to use. Sales of the Macintosh soared like nothing ever seen before. IBM was hot on Apple's tail and released the 286-AT, which, with applications like the Lotus 1-2-3 spreadsheet and Microsoft Word, quickly became the favourite of businesses.

That brings us up to about ten years ago. Now people have their own personal graphics workstations and powerful home computers. The average computer a person might have in their home is more powerful by several orders of magnitude than a machine like ENIAC. The computer revolution has been the fastest growing technology in man's history.

PCs Today

As an example of the wonders of this modern-day technology, let's take a look at this presentation. The whole presentation from start to finish was prepared on a variety of computers using a variety of different software applications. An application is any program that a computer runs that enables you to get things done. This includes things like word processors for creating text, graphics packages for drawing pictures, and communication packages for moving data around the globe.

The colour slides that you have been looking at were prepared on an IBM 486 machine running Microsoft® Windows® 3.1. Windows is a type of operating system. Operating systems are the interface between the user and the computer, enabling the user to type high-level commands such as "format a:" into the computer, rather than issuing complex assembler or C commands. Windows is one of the numerous graphical user interfaces around that allow the user to manipulate their environment using a mouse and icons. Other examples of graphical user interfaces (GUIs) include X-Windows, which runs on UNIX® machines, and Mac OS X, which is the operating system of the Macintosh.

Once Windows was running, I used a multimedia tool called Freelance Graphics to create the slides. Freelance, from Lotus Development Corporation, allows the user to manipulate text and graphics for the explicit purpose of producing presentations and slides. It contains drawing tools and numerous text placement tools, and it allows the user to import text and graphics from a variety of sources. A number of the graphics used, for example the shaman, come from clip art collections on a CD-ROM.

The text for the lecture was also created on a computer. Originally, I used Microsoft® Word, which is a word processor available for the Macintosh and for Windows machines. Once I had typed up the lecture, I decided to make it available, slides and all, electronically by placing the slides and the text onto my local Web server.

The Web

The Web (or more properly, the World Wide Web) was developed at CERN in Switzerland as a new way of communicating text and graphics across the Internet. It uses the hypertext markup language (HTML) to describe the attributes of the text and the placement of graphics, sounds, or even movie clips. Since it was first introduced, the number of users has blossomed, and the number of sites containing information and searchable archives has been growing at an unprecedented rate. It is now even possible to order your favourite Pizza Hut pizza in Santa Cruz via the Web!

Servers

The actual workings of a Web server are beyond the scope of this course, but two things are important to know: 1) in order to use the Web, someone needs to be running a Web server program on a machine for which such a program exists; and 2) the local user needs to run an application program, known as a client program, to connect to the server. Server programs are available for many types of computers and operating systems, such as Apache for UNIX (and other operating systems), Microsoft Internet Information Server (IIS) for Windows NT, and WebStar for the Macintosh. Most client programs available today can display images, play music, or show movies, and they use a graphical interface with a mouse. Common client programs include Netscape, Opera, and Microsoft Internet Explorer (for Windows and Macintosh computers). There are also special clients that only display text, like lynx for UNIX systems, or that help the visually impaired.
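The two roles described above can be tried out on a single machine. This is a minimal sketch using only Python's standard library: a tiny HTTP server stands in for a program like Apache, IIS or WebStar, and a client connects to it and requests a page. The handler `HelloHandler`, the function `fetch_from_local_server` and the page content are hypothetical; a real browser would also render the returned HTML instead of handing it back as text.

```python
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical server-side handler: returns one small HTML page for any path."""

    def do_GET(self):
        body = b"<html><body><h1>Lecture notes</h1></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request log lines


def fetch_from_local_server():
    # Server side: port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        # Client side: connect to the server and request the page at "/".
        port = server.server_address[1]
        conn = HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request("GET", "/")
        response = conn.getresponse()
        status, page = response.status, response.read().decode("utf-8")
        conn.close()
        return status, page
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, page = fetch_from_local_server()
    print(status)  # 200
    print(page)
```

Keeping server and client in one script is only a convenience for the example; on the Web the two normally run on different machines.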

As mentioned earlier, servers contain files full of information about courses, research interests and games, for example. All of this information is formatted in a language called HTML (hypertext markup language). HTML allows the user to insert formatting directives into the text, much like some of the first word processors for home computers. Anyone who is currently taking English 100 or has taken it knows that there is a specific style and format for submitting essays. The same is true of HTML documents.
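In HTML, those formatting directives are the tags written in angle brackets. A short sketch using Python's standard `html.parser` module separates the directives from the text they format; the class `DirectiveLister`, the function `list_directives` and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class DirectiveLister(HTMLParser):
    """Records each opening tag (formatting directive) and the text between tags."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        # Called for every opening tag such as <h1>, <p> or <b>.
        self.tags.append(tag)

    def handle_data(self, data):
        # Called for the ordinary text that the directives apply to.
        if data.strip():
            self.text.append(data.strip())


def list_directives(page):
    parser = DirectiveLister()
    parser.feed(page)
    parser.close()
    return parser.tags, parser.text


# Hypothetical page fragment
page = "<h1>The Web</h1><p>Developed at <b>CERN</b> in Switzerland.</p>"
tags, text = list_directives(page)
print(tags)  # ['h1', 'p', 'b']
print(text)  # ['The Web', 'Developed at', 'CERN', 'in Switzerland.']
```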

More information about HTML is now readily available everywhere, including in your local bookstore.

This brings us to the conclusion of this lecture. Please e-mail any comments you have to thelectureATeingangNOSPAMorg (replace AT with @ and NOSPAM with .).

http://www.eingang.org/Lecture

http://www.cambridge.org/elt/infotech

How Removable Storage Works

A tiny hard drive powers this removable storage device.

Removable storage has been around almost as long as the computer itself. Early removable storage was based on magnetic tape like that used by an audio cassette. Before that, some computers even used paper punch cards to store information!

We've come a long way since the days of punch cards. New removable storage devices can store hundreds of megabytes (and even gigabytes) of data on a single disk, cassette, card or cartridge. In this article, you will learn about the three major storage technologies. We'll also talk about which devices use each technology and what the future holds for this medium. But first, let's see why you would want removable storage.

Portable Memory

There are several reasons why removable storage is useful:

• Distributing commercial software

• Making back-up copies of important information

• Transporting data between two computers

• Storing software and information that you don't need to access constantly

• Copying information to give to someone else

• Securing information that you don't want anyone else to access

Modern removable storage devices offer an incredible number of options, with storage capacities ranging from the 1.44 megabytes (MB) of a standard floppy to upwards of 20 gigabytes (GB) on some portable drives. All of these devices fall into one of three categories:
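To put that range in perspective, here is a quick calculation of how many floppies would be needed to hold the contents of a 20-GB drive. It assumes decimal gigabytes for the drive (10^9 bytes each) and the usual formatted capacity of a "1.44-MB" floppy, which is 1,440 × 1,024 = 1,474,560 bytes; `media_needed` is a hypothetical helper name.

```python
FLOPPY_BYTES = 1440 * 1024    # a "1.44 MB" floppy holds 1,474,560 bytes
PORTABLE_BYTES = 20 * 10**9   # assumed: 20 GB counted in decimal units


def media_needed(total_bytes, capacity_bytes):
    """Smallest whole number of media of capacity_bytes that holds total_bytes."""
    return -(-total_bytes // capacity_bytes)  # integer ceiling division


print(media_needed(PORTABLE_BYTES, FLOPPY_BYTES))  # 13564
```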

• Magnetic storage

• Optical storage

• Solid-state storage

In the following sections, we will take an in-depth look at each of these technologies.

Magnetic Storage

The most common and enduring form of removable-storage technology is magnetic storage. For example, 1.44-MB floppy-disk drives using 3.5-inch diskettes have been around for about 15 years, and they are still found on almost every computer sold today. In most cases, removable magnetic storage uses a drive, which is a mechanical device that connects to the computer. You insert the media, which is the part that actually stores the information, into the drive.

Just as in a hard drive, the media used in removable magnetic-storage devices is coated with iron oxide. This oxide is a ferromagnetic material, meaning that if you expose it to a magnetic field it stays magnetized after the field is removed. The media is typically called a disk or a cartridge. The drive uses a motor to rotate the media at high speed, and it accesses (reads) the stored information using small devices called heads.

Each head has a tiny electromagnet, which consists of an iron core wrapped with wire. The electromagnet applies a magnetic flux to the oxide on the media, and the oxide permanently "remembers" the flux it sees. During writing, the data signal is sent through the coil of wire to create a magnetic field in the core. At a small gap in the core, the magnetic flux forms a fringe pattern. This pattern bridges the gap, and the flux magnetizes the oxide on the media. When the drive reads the data, the magnetized media moving past the read head produces a varying magnetic field across the gap, which creates a varying magnetic flux in the core and therefore induces a signal in the coil. This signal is then sent to the computer as binary data.

Magnetic: Direct Access

Magnetic disks or cartridges have a few things in common:

• They use a thin plastic or metal base material coated with iron oxide.

• They can record information instantly.

• They can be erased and reused many times.

• They are reasonably inexpensive and easy to use.

If you have ever used an audio cassette, you know that it has one big disadvantage -- it is a sequential device. The tape has a beginning and an end, and to move the tape to a later song you have to use the fast forward and rewind buttons to find the start of the song. This is because the tape heads are stationary.

A disk or cartridge, like a cassette tape, is made from a thin piece of plastic coated with magnetic material on both sides. However, it is shaped like a disk rather than a long, thin ribbon. The tracks are arranged in concentric rings so the software can jump from "file 1" to "file 19" without having to fast forward through files 2 through 18. The disk or cartridge spins like a record and the heads move to the correct track, providing what is known as direct-access storage. Some removable devices actually contain a stack of several magnetic platters, similar to the set-up in a hard drive. Tape is still used for some long-term storage, such as backing up a server's hard drive, where quick access to the data is not essential.
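A toy model makes the difference between sequential and direct access concrete. The classes `Tape` and `Disk` below are hypothetical and count only movements, not real timings: the tape has to wind past every file between its current position and the one requested, while the disk head moves directly to the track holding the file.

```python
class Tape:
    """Sequential device: the head is fixed, so the tape winds past each file."""

    def __init__(self, files):
        self.files = list(files)
        self.position = 0    # index of the file currently under the head
        self.wind_steps = 0  # files wound past the head so far

    def read(self, index):
        step = 1 if index > self.position else -1  # fast forward or rewind
        while self.position != index:
            self.position += step
            self.wind_steps += 1
        return self.files[index]


class Disk:
    """Direct-access device: the head moves straight to the file's track."""

    def __init__(self, files):
        self.tracks = dict(enumerate(files))  # track number -> file
        self.seeks = 0

    def read(self, index):
        # One head movement regardless of distance (real seek time still grows
        # somewhat with distance, but nothing in between has to be passed over).
        self.seeks += 1
        return self.tracks[index]


files = [f"file {n}" for n in range(1, 20)]  # "file 1" ... "file 19"
tape, disk = Tape(files), Disk(files)
print(tape.read(18), tape.wind_steps)  # file 19 18
print(disk.read(18), disk.seeks)       # file 19 1
```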

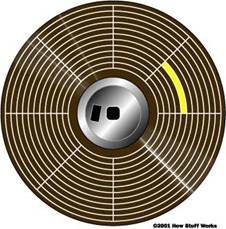

In the illustration above, you can see how the disk is divided into tracks (brown) and sectors (yellow).
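Tracks and sectors also give every block of data an address. A minimal sketch, assuming the standard layout of a 1.44-MB, 3.5-inch floppy (80 tracks per side, 2 sides, 18 sectors per track, 512 bytes per sector), shows how that geometry yields the disk's capacity and how a (track, side, sector) address can be turned into a single sector number using the conventional PC numbering. The function name `sector_index` is hypothetical.

```python
# Assumed geometry of a standard 1.44-MB, 3.5-inch floppy disk
TRACKS_PER_SIDE = 80
SIDES = 2
SECTORS_PER_TRACK = 18
BYTES_PER_SECTOR = 512


def sector_index(track, side, sector, sides=SIDES, sectors_per_track=SECTORS_PER_TRACK):
    """Convert a (track, side, sector) address to a single sector number.

    Tracks and sides count from 0 and sectors count from 1 (the usual PC
    convention); both sides of a track are numbered before the next track.
    """
    return (track * sides + side) * sectors_per_track + (sector - 1)


capacity = TRACKS_PER_SIDE * SIDES * SECTORS_PER_TRACK * BYTES_PER_SECTOR
print(capacity)                 # 1474560
print(sector_index(0, 1, 1))    # 18: first sector on the second side
print(sector_index(79, 1, 18))  # 2879: the last of 2,880 sectors
```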

The read/write heads ("writing" is saving new information to the storage media) do not touch the media when the heads are traveling between tracks. There is normally some type of mechanism that you can set to protect a disk or cartridge from being written to. For example, electronic optics check for the presence of an opening in the lower corner of a 3.5-inch diskette (or a notch in the side of a 5.25-inch diskette) to see if the user wants to prevent data from being written to it.

Magnetic: Zip

Over the years, magnetic technology has improved greatly. Because of the immense popularity and low cost of floppy disks, higher-capacity removable storage has not been able to completely replace the floppy drive. But there are a number of alternatives that have become very popular in their own right. One such example is the Zip from Iomega.

The Zip drive comes in several configurations, including SCSI, USB, parallel port and internal ATAPI.

The main thing that separates a Zip disk from a floppy disk is the magnetic coating used. On a Zip disk, the coating is of a much higher quality. The higher-quality coating means that the read/write head in a Zip drive can be significantly smaller than in a floppy drive (by a factor of 10 or so). The smaller head, in conjunction with a head-positioning mechanism similar to the one used in a hard disk, means that a Zip drive can pack thousands of tracks per inch on the disk surface. Zip drives also use a variable number of sectors per track to make the best use of disk space. All of these features combine to create a floppy disk that holds a huge amount of data -- up to 750 MB at the moment.
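Why a variable number of sectors per track saves space can be shown with a toy model. Outer tracks are longer than inner ones, so if every track gets the same sector count (set by the shortest, innermost track), the outer tracks are underused. The model assumes every sector occupies the same length of track; the radii, track spacing and sector length are hypothetical round numbers, not Zip specifications.

```python
import math


def sectors_fixed(radii_mm, sector_length_mm):
    """Same sector count on every track, limited by the shortest (innermost) track."""
    per_track = math.floor(2 * math.pi * min(radii_mm) / sector_length_mm)
    return per_track * len(radii_mm)


def sectors_variable(radii_mm, sector_length_mm):
    """Each track gets as many sectors as its own circumference can hold."""
    return sum(math.floor(2 * math.pi * r / sector_length_mm) for r in radii_mm)


# Hypothetical disk: 41 tracks from 20 mm to 40 mm radius, 1 mm of track per sector
radii = [20 + 0.5 * i for i in range(41)]
fixed = sectors_fixed(radii, 1.0)
variable = sectors_variable(radii, 1.0)
print(fixed)                       # 5125
print(f"{variable / fixed:.2f}x")  # how many times more sectors the variable layout fits
```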

Magnetic: Cartridges

Another method of using magnetic technology for removable storage is essentially taking a hard disk and putting it in a self-contained case. One of the more successful products using this method is the Iomega Jaz. Each Jaz cartridge is basically a hard disk, with several platters, contained in a hard plastic case. The cartridge contains neither the heads nor the motor for spinning the disk; both of these items are in the drive unit.

The current Jaz drive uses 2-GB cartridges, but also accepts the 1-GB cartridge used by the original Jaz.

Magnetic: Portable Drives

Completely external, portable hard drives are quickly becoming popular, due in great part to USB technology. These units, like the drives inside a typical PC, have the drive mechanism and the media all in one sealed case. The drive connects to the PC via a USB cable and, after the driver software is installed the first time, is automatically listed by Windows as an available drive.

This 20-GB Pockey Drive fits in the palm of your hand.

Another type of portable hard drive is called a microdrive. These tiny hard drives are built into PCMCIA cards that can be plugged into any device with a PCMCIA slot, such as a laptop computer.

This microdrive holds 340 MB and is about the size of a matchbox.

You can read more about magnetic storage in How Hard Disks Work and How Tape Recorders Work. To learn about optical storage technology, check out the next page.

Optical Storage

The optical storage device that most of us are familiar with is the compact disc (CD). A CD can store huge amounts of digital information (783 MB) on a very small surface that is incredibly inexpensive to manufacture. The design that makes this possible is a simple one: The CD surface is a mirror covered with billions of tiny bumps that are arranged in a long, tightly wound spiral. The CD player reads the bumps with a precise laser and interprets the information as bits of data.
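The 783-MB figure matches the raw data in 74 minutes of CD audio. Assuming the standard audio format of 44,100 samples per second per channel, 2 bytes (16 bits) per sample and 2 channels, the arithmetic is:

```python
SAMPLE_RATE_HZ = 44_100   # samples per second, per channel
BYTES_PER_SAMPLE = 2      # 16-bit samples
CHANNELS = 2              # stereo
PLAYING_TIME_S = 74 * 60  # assumed 74-minute disc

audio_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS * PLAYING_TIME_S
print(audio_bytes)  # 783216000
```

Data CD-ROMs hold less user data than this (about 650 MB on a 74-minute disc), because part of each sector goes to headers and extra error correction.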

The spiral of bumps on a CD starts in the center. CD tracks are so small that they have to be measured in microns (millionths of a meter). The CD track is approximately 0.5 microns wide, with 1.6 microns separating one track from the next. The elongated bumps are each 0.5 microns wide, a minimum of 0.83 microns long and 125 nanometers (billionths of a meter) high.
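The track pitch lets you estimate how long the spiral is. This is a minimal sketch, assuming the recorded area runs from a radius of about 25 mm to about 58 mm (these radii are not given in the text): the number of turns is the width of that band divided by the 1.6-micron pitch, and the length is roughly the band's area divided by the pitch.

```python
import math

TRACK_PITCH_UM = 1.6    # from the text: 1.6 microns from one turn to the next
INNER_RADIUS_MM = 25.0  # assumed inner edge of the recorded area
OUTER_RADIUS_MM = 58.0  # assumed outer edge of the recorded area


def spiral_turns(inner_mm, outer_mm, pitch_um):
    """Number of turns: radial width of the band divided by the track pitch."""
    return (outer_mm - inner_mm) * 1000 / pitch_um


def spiral_length_m(inner_mm, outer_mm, pitch_um):
    """Approximate length: area of the band divided by the track pitch."""
    area_mm2 = math.pi * (outer_mm**2 - inner_mm**2)
    return area_mm2 / (pitch_um / 1000) / 1000  # mm -> m


turns = spiral_turns(INNER_RADIUS_MM, OUTER_RADIUS_MM, TRACK_PITCH_UM)
length = spiral_length_m(INNER_RADIUS_MM, OUTER_RADIUS_MM, TRACK_PITCH_UM)
print(round(turns))               # 20625
print(f"{length / 1000:.1f} km")  # 5.4 km
```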

Most of the mass of a CD is an injection-molded piece of clear polycarbonate plastic that is about 1.2 millimeters thick. During manufacturing, this plastic is impressed with the microscopic bumps that make up the long, spiral track. A thin, reflective aluminum layer is then deposited on top of the disc, covering the bumps. The tricky part of CD technology is reading all the tiny bumps correctly, in the right order and at the right speed. To do all of this, the CD player has to be exceptionally precise when it focuses the laser on the track of bumps.

When you play a CD, the laser beam passes through the CD's polycarbonate layer, reflects off the aluminum layer and hits an optoelectronic device that detects changes in light. The bumps reflect light differently than the flat parts of the aluminum layer, which are called lands. The optoelectronic sensor detects these changes in reflectivity, and the electronics in the CD-player drive interpret the changes as data bits.
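The idea of reading changes in reflectivity can be sketched as follows. This is a deliberately simplified model, assuming each sample along the track is either a land (`L`) or a bump (`B`), and that a change between the two reads as a 1 while no change reads as a 0; real CD players also undo a channel code (EFM) and apply error correction, both left out here. The function name `transitions_to_bits` is hypothetical.

```python
def transitions_to_bits(samples):
    """Simplified read-out: a land/bump change reads as 1, no change as 0."""
    return [1 if current != previous else 0
            for previous, current in zip(samples, samples[1:])]


# Hypothetical reflectivity samples along the track: L = land, B = bump
print(transitions_to_bits("LLBBBLLLB"))  # [0, 1, 0, 0, 1, 0, 0, 1]
```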

The basic parts of a compact-disc player

Optical: CD-R/CD-RW

That is how a normal CD works, which is great for prepackaged software, but no help at all as removable storage for your own files. That's where CD-recordable (CD-R) and CD-rewritable (CD-RW) come in.

CD-R works by replacing the aluminum layer in a normal CD with an organic dye compound. This compound is normally reflective, but when the laser focuses on a spot and heats it to a certain temperature, it "burns" the dye, causing it to darken. When you want to retrieve the data you wrote to the CD-R, the laser moves back over the disc and treats each burnt spot as a bump. The problem with this approach is that you can only write data to a CD-R once: after the dye has been burned in a spot, it cannot be changed back.
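The write-once behaviour can be captured in a small toy model. The class `WriteOnceDisc` and its per-sector bookkeeping are hypothetical; the only point it illustrates is that an area whose dye has been burned accepts no second write.

```python
class WriteOnceDisc:
    """Toy CD-R: each sector starts as blank dye and can be burned only once."""

    def __init__(self, sectors):
        self.data = [None] * sectors  # None means the dye is still unburned

    def write(self, sector, payload):
        if self.data[sector] is not None:
            # Burned dye cannot be changed back, so a second write must fail.
            raise PermissionError(f"sector {sector} has already been written")
        self.data[sector] = payload

    def read(self, sector):
        return self.data[sector]


disc = WriteOnceDisc(sectors=4)
disc.write(0, b"lecture notes")
print(disc.read(0))  # b'lecture notes'
try:
    disc.write(0, b"new version")
except PermissionError as err:
    print(err)       # sector 0 has already been written
```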

CD-RW fixes this problem by using phase change, which relies on a very special mixture of antimony, indium, silver and tellurium. This particular compound has an amazing property: When heated to one temperature, it crystallizes as it cools and becomes very reflective; when heated to another, higher temperature, the compound does not crystallize when it cools and so becomes dull in appearance.
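The phase-change rule can be written as a small function. The two temperature thresholds below are placeholder values chosen for illustration, not the specifications of any real CD-RW medium, and `cool_after_heating` is a hypothetical name; the function only encodes the behaviour the paragraph describes, plus the assumption that heating below both thresholds leaves a spot unchanged.

```python
from enum import Enum


class Phase(Enum):
    CRYSTALLINE = "reflective"
    AMORPHOUS = "dull"


# Placeholder thresholds in degrees Celsius (illustrative, not real specifications)
CRYSTALLIZE_AT = 200
NO_CRYSTAL_AT = 600


def cool_after_heating(peak_temp_c, current_phase):
    """Phase of a spot after the laser heats it to peak_temp_c and it cools."""
    if peak_temp_c >= NO_CRYSTAL_AT:
        return Phase.AMORPHOUS    # higher temperature: no crystals form, spot turns dull
    if peak_temp_c >= CRYSTALLIZE_AT:
        return Phase.CRYSTALLINE  # lower temperature: crystallizes while cooling, reflective
    return current_phase          # assumed: too cool to change the spot at all


spot = Phase.CRYSTALLINE
spot = cool_after_heating(650, spot)
print(spot.value)  # dull
spot = cool_after_heating(250, spot)
print(spot.value)  # reflective
```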

The Predator is a fast CD-RW drive from Iomega.

CD-RW drives have three laser settings to make use of this property:

• Read - The normal setting that reflects light to the optoelectronic sensor